CS109 Final Project: Factors in Alzheimer's Cognitive Decline

Walter Thornton

Dwayne Kennemore

Problem Statement and Motivation

In our preliminary EDA, we explored whether men and women differ in cognitive decline over time after being diagnosed with Alzheimer’s disease. In digging through the data, our question evolved: here we explore how demographic and lifestyle factors influence patients’ experience of Alzheimer’s disease. This is of interest to us because, while patients cannot control the hereditary and genetic factors that may predispose them to developing Alzheimer’s disease, they might reduce their chances of developing it, or slow the rate of their cognitive decline, by altering factors in their lives over which they do have a choice.

Description of Data / Literature Review

Measuring the Progress of Cognitive Impairment

Alzheimer’s disease cannot yet be definitively diagnosed prior to a patient’s death, so any diagnosis for purposes of inclusion in the ADNI data is an estimate. Much work beyond the scope of this project is being done to find factors that will improve the accuracy of pre-mortem diagnoses. The best measures currently available for this purpose are measures of cognitive impairment because, in expectation, impairment progresses much more rapidly in Alzheimer’s patients than in those who are cognitively normal or even otherwise cognitively impaired.

The data fueling our analysis came exclusively from the USC ADNI database. When we performed our initial EDA, we focused on Everyday Cognition (“ECog”) data, because it is a prominent and often-used measure of performance on (as its name suggests) the typical mental activities patients might perform. See Farias and Mungas, The Measurement of Everyday Cognition (ECog): Scale Development and Psychometric Properties, (“Farias I”); and Farias, et al., The Measurement of Everyday Cognition: Development and Validation of a Short Form ("Farias II").

ECog rates subjects on various dimensions of everyday functioning. Patients are asked to rate statements about their functioning on a 1-4 scale, as follows:

1 Better or no change compared to 10 years earlier

2 Questionable/occasionally worse

3 Consistently a little worse

4 Consistently much worse

Although it is a widely used test, we found that ECog data was missing so frequently that we were not able to explore cognitive trends in patients more than 24 months after the baseline diagnosis. Fortunately, in revisiting the data we found that other well-normed measures that attempt to capture the same thing as ECog were available and more consistently recorded.

The Clinical Dementia Rating Scale (“CDR”), whose current version and scoring rules were published in 1993, measures memory, orientation, judgment and problem solving, community affairs, home and hobbies, and personal care. See Morris JC. The Clinical Dementia Rating (CDR): Current version and scoring rules. Neurology. 1993;43:2412–2414. The score drawn from the ADNI data is a “sum of boxes” score rather than a scaled score.

Farias II compared the ECog-12 and other tests and found the following relationship:

Available Data

To put the findings in context, it helps to characterize the pool of participants. Our data was divided by sex and initial diagnosis as described in the table below:

Patients typically had more than one record because of the longitudinal nature of the study; a patient who presented four times for assessment would have four separate records, for example.

Modeling Approach

We ran a multiple regression using CDR as the dependent variable and the following independent variables: sex, number of copies of APOE4, self-identified ethnicity and race, marital status, age, years of education, and months since baseline determination.
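As a rough illustration of this kind of specification, the sketch below dummy-codes a categorical predictor and fits an ordinary least squares model with NumPy. The toy records, the coefficient values, and the helper names `one_hot` and `fit_ols` are hypothetical and are not drawn from ADNI; only a subset of the predictors is shown.

```python
import numpy as np

def one_hot(values, drop_first=True):
    """Dummy-code a categorical column; returns (matrix, kept level names)."""
    levels = sorted(set(values))
    kept = levels[1:] if drop_first else levels  # dropped level is the reference
    matrix = np.array([[1.0 if v == lvl else 0.0 for lvl in kept] for v in values])
    return matrix, kept

def fit_ols(X, y):
    """Least-squares fit of y on X with an intercept column prepended."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta

# Hypothetical toy records (not ADNI data)
sex = ["Female", "Male", "Female", "Male", "Female", "Male", "Female", "Male"]
apoe4 = np.array([0, 0, 1, 1, 2, 2, 0, 1], dtype=float)
months = np.array([0, 6, 12, 24, 0, 6, 12, 24], dtype=float)

sex_dummies, sex_levels = one_hot(sex)  # "Female" becomes the reference level
X = np.column_stack([sex_dummies, apoe4, months])

# Toy outcome built from known coefficients so the fit can be checked
cdr = 1.0 + 0.5 * sex_dummies[:, 0] + 0.3 * apoe4 + 0.01 * months

beta = fit_ols(X, cdr)
print(dict(zip(["intercept", *sex_levels, "apoe4", "months"], beta.round(3))))
```

Dropping one level of each categorical variable avoids perfect collinearity between the dummy columns and the intercept; the coefficient on each remaining dummy is then read relative to the dropped reference level.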

In our initial tests, we tried to include the CDR baseline measure as an independent variable, but since CDR (current) is the dependent variable, this created a large collinearity problem, so we removed it.

We first wanted to assess expected cognitive decline over time for men and women unconditional on diagnosis. We ran two separate regressions and found the following for each group, first for the men:

And then for the women:

As can be seen, women tend to score lower on the CDR scale at the outset, but the confidence intervals for the intercept for men and women overlap very slightly (between 1.840 and 1.852). The expected monthly increase in the CDR scale is 0.0121 for men and 0.0148 for women, but again, the confidence intervals overlap.
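The interval comparison described here can be sketched as follows. This is a minimal illustration, not the code behind the reported figures: it assumes a normal approximation (z = 1.96) for the 95% intervals, which is reasonable only for large samples, and it uses synthetic data with made-up parameters in place of the two groups. The names `ols_with_ci` and `intervals_overlap` are hypothetical.

```python
import numpy as np

def ols_with_ci(x, y, z=1.96):
    """Fit y = a + b*x; return coefficients and approximate 95% intervals."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - X.shape[1]
    sigma2 = resid @ resid / dof               # residual variance estimate
    cov = sigma2 * np.linalg.inv(X.T @ X)      # coefficient covariance matrix
    se = np.sqrt(np.diag(cov))
    return beta, np.column_stack([beta - z * se, beta + z * se])

def intervals_overlap(a, b):
    """True if closed intervals a=(lo, hi) and b=(lo, hi) share any point."""
    return max(a[0], b[0]) <= min(a[1], b[1])

# Synthetic stand-ins for the two groups (parameters are assumptions)
rng = np.random.default_rng(0)
months = rng.choice([0, 6, 12, 24, 36], size=300).astype(float)
cdr_a = 1.9 + 0.012 * months + rng.normal(0.0, 1.5, size=300)
cdr_b = 1.8 + 0.015 * months + rng.normal(0.0, 1.5, size=300)

beta_a, ci_a = ols_with_ci(months, cdr_a)
beta_b, ci_b = ols_with_ci(months, cdr_b)
print("intercept intervals overlap:", intervals_overlap(ci_a[0], ci_b[0]))
print("slope intervals overlap:", intervals_overlap(ci_a[1], ci_b[1]))
```

Comparing two separately computed intervals is an informal check. A pooled regression with a sex indicator and a sex-by-months interaction term would test the difference in intercepts and slopes directly.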

Note that the R-squared figures are vanishingly small for each group, even though both the intercept and the months-since-baseline coefficient are statistically significant and positive. The charts illustrate convincingly how little of the variance in the CDR scale is explained by sex and the passage of time.
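A tiny R-squared and a significant slope can coexist because of how the two quantities are defined. As a worked illustration for a regression with a single predictor (the sample size n and the R-squared value below are hypothetical round numbers, not the actual figures from our regressions):

```latex
R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2},
\qquad
|t| = \sqrt{\frac{R^2\,(n-2)}{1 - R^2}}

% Hypothetical example: R^2 = 0.01 and n = 5000
|t| = \sqrt{\frac{0.01 \times 4998}{0.99}} \approx 7.1
```

With thousands of records, a slope that explains only 1% of the variance can still sit many standard errors away from zero, so significance here says little about explanatory power.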

Conditional on an Alzheimer’s (“AD”) diagnosis, men exhibited both poorer initial results at diagnosis and a much more rapid cognitive decline, per the results below:

We considered whether the poorer baseline might be related to a bias in when patients first entered the data pool. That is, Alzheimer’s patients seem to enter the study already higher up on the CDR scale, so if they first presented at a later age, that could affect the intercept. However, we found no significant differences in the age at first presentment, split out by diagnosis, that might support this:

Rather, we think that Alzheimer’s was most likely destroying cognitive ability long before diagnosis; it was just unnoticed.

The expected monthly increase is 0.12, roughly ten times the figure for the pool of all male study participants.

The results for women appear below:

For the women, it is slightly worse: the intercept is virtually the same, but the rate of decline over time is higher, again about 10x the monthly decline for women in the data set generally.

Returning to the aggregate data (all diagnoses), we then looked at other demographic variables, such as race and marital status, to track overall cognitive decline. We also added factors for the presence of one or two copies of APOE4. This is obviously not a demographic factor, but in our research we found it was a well-documented factor in assessing the likelihood of developing Alzheimer’s disease, and we had the data, so we thought we would be remiss to leave it out.

Even with these other factors, our R-squared is low, at 0.126.

But what makes this regression interesting is that, when these other factors are included, sex loses its statistical significance, as does the intercept. As can be seen, the only statistically significant factors here are the presence of one or two copies of APOE4 (positive impact on CDR for each of them), whether the subject was married (positive), never married (negative), or widowed (positive), their age (positive), and the passage of time since baseline diagnosis. Race and ethnicity do not appear to be statistically significant.

Isolating the AD diagnosed patients, the picture is slightly different:

The intercept is once again statistically significant (and positive), which is consistent with our hunch that Alzheimer’s has been slowly eroding cognition over the time before presentment, in the subject’s 60s. Sex is not statistically significant. Having 1 or 2 copies of APOE4 is no longer statistically significant. This was confusing to us initially, but if having 1 or 2 copies of APOE4 is just positively correlated with having Alzheimer’s disease, then it would lose its relevance if the data set being examined includes only those who have Alzheimer’s.

After removing some of the irrelevant factors, we get:

In this regression, race and ethnicity are relevant factors. However, there may be racial differences in performance on this test, as it was originally normed, that are not related to Alzheimer’s disease at all; had we more time, we would delve into this to see whether these factors can be explained in some way.

We speculate that CDR might be lower for a person who never married because he or she receives less social feedback by leading a more solitary existence and not having regular interaction with a husband/wife or (probably) children. If using one’s mind regularly as one would in social interaction improves its health generally, perhaps it may stave off the effects of Alzheimer’s-related mental decline.

An Attempt at a Projection Model

We tried to build a projection model to predict cognitive decline versus baseline. We created a dataframe using the following factors, converted into a series of dummy variables:

We divided the ADNIMERGE data into 1/3 train and 2/3 test, and then normalized all variables. We ran a linear regression and our training R-squared was 0.41. Our test R-squared was negative, and we concluded that our model was badly overfit.

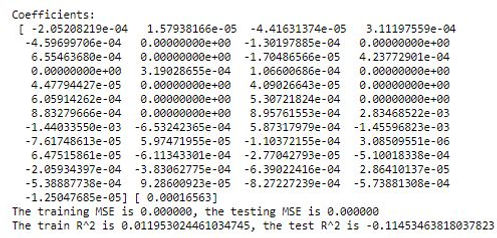
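To make the train/test comparison concrete, here is a minimal sketch of the procedure: split, standardize, fit by least squares, and score both sets. It is not the code that produced the figures above. It assumes the standardization uses training-set statistics only, and it runs on pure-noise toy data; the function names are hypothetical.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """1 - SS_res / SS_tot; negative when predictions are worse than the mean."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot

def split_standardize_fit(X, y, train_frac=1 / 3, seed=0):
    """Random split, standardize with training statistics, fit OLS, score both sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    n_train = int(round(train_frac * len(y)))
    tr, te = idx[:n_train], idx[n_train:]

    mu, sd = X[tr].mean(axis=0), X[tr].std(axis=0)
    sd[sd == 0] = 1.0  # guard against constant columns

    X_tr = np.column_stack([np.ones(len(tr)), (X[tr] - mu) / sd])
    X_te = np.column_stack([np.ones(len(te)), (X[te] - mu) / sd])
    beta, *_ = np.linalg.lstsq(X_tr, y[tr], rcond=None)
    return r_squared(y[tr], X_tr @ beta), r_squared(y[te], X_te @ beta)

# Toy data: 15 noise features and a noise outcome, so any fit is spurious
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 15))
y = rng.normal(size=60)

train_r2, test_r2 = split_standardize_fit(X, y)
print("train R-squared:", round(train_r2, 3))
print("test R-squared:", round(test_r2, 3))
```

A negative test R-squared means the model predicts held-out records worse than simply using their mean. With many dummy variables and a training set only one third of the data, the model has ample room to fit noise in the training set.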

Separately, we ran a cross-validated ridge regression and obtained results that were not meaningful (note the negative R-squared for the test set):

Our results were no better when we ran a regression with polynomial features (degree = 2).
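Both variations can be sketched briefly. The block below is a minimal illustration, assuming a closed-form ridge solution, k-fold cross-validation over a hypothetical grid of penalties, and a plain degree-2 feature expansion. The function names, the penalty grid, and the toy data are assumptions for illustration, not the code behind the results above.

```python
from itertools import combinations_with_replacement
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge; centering leaves the intercept unpenalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    coef = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return coef, y_mean - x_mean @ coef

def cv_ridge(X, y, alphas, k=5, seed=0):
    """Pick the penalty with the lowest mean validation MSE over k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for alpha in alphas:
        fold_mse = []
        for i in range(k):
            val = folds[i]
            train = np.concatenate([folds[j] for j in range(k) if j != i])
            coef, intercept = ridge_fit(X[train], y[train], alpha)
            pred = X[val] @ coef + intercept
            fold_mse.append(np.mean((y[val] - pred) ** 2))
        scores.append(np.mean(fold_mse))
    return alphas[int(np.argmin(scores))], scores

def degree2_features(X):
    """Original columns plus all squares and pairwise products."""
    X = np.asarray(X, dtype=float)
    cols = [X[:, j] for j in range(X.shape[1])]
    for i, j in combinations_with_replacement(range(X.shape[1]), 2):
        cols.append(X[:, i] * X[:, j])
    return np.column_stack(cols)

# Toy data (not ADNI): two informative features and two noise features
rng = np.random.default_rng(0)
X = rng.normal(size=(90, 4))
y = X @ np.array([0.5, 0.0, -0.3, 0.0]) + rng.normal(size=90)

alphas = [0.01, 0.1, 1.0, 10.0, 100.0]
best_alpha, scores = cv_ridge(X, y, alphas)
X2 = degree2_features(X)
print("best alpha:", best_alpha)
print("columns before and after expansion:", X.shape[1], X2.shape[1])
```

The expansion grows the feature count from p to p + p(p+1)/2, which gives an already overfit model more room to fit noise. For 0/1 dummy variables, each squared column simply duplicates the original, so a degree-2 expansion adds redundant columns alongside the interaction terms.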

At this point we were about to give up on the prospect of finding any predictive lifestyle factor, until we ran into an item on the Geriatric Depression Scale (GDScale) in the ADNI1 data. The item GDHome asks respondents:

“Do you prefer to stay home, rather than going out and doing new things?”

Surprisingly, this one preference alters the CDR meaningfully for the broader group, as seen below:

For the AD group, the measurable difference owing to “attitude” is even more pronounced:
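One simple way to quantify a group difference of this kind is to compare mean CDR between respondents who answered yes and no, for example with Welch's t statistic. The sketch below is an illustration under stated assumptions, not the analysis behind the results in this section: the records are invented, and the coding of GDHome as 1 = yes, 0 = no is an assumption.

```python
import numpy as np

def welch_t(a, b):
    """Welch's t statistic for a difference in means with unequal variances."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    se = np.sqrt(a.var(ddof=1) / len(a) + b.var(ddof=1) / len(b))
    return (a.mean() - b.mean()) / se

# Hypothetical toy records: (GDHome answer, CDR score); not ADNI data
records = [
    (1, 3.0), (1, 4.5), (1, 2.5), (1, 5.0),
    (0, 1.0), (0, 2.0), (0, 1.5), (0, 2.5),
]
prefers_home = [cdr for answer, cdr in records if answer == 1]
goes_out = [cdr for answer, cdr in records if answer == 0]

print("mean CDR, prefers home:", np.mean(prefers_home))
print("mean CDR, goes out:", np.mean(goes_out))
print("Welch t:", welch_t(prefers_home, goes_out))
```

Because patients contribute several longitudinal records each, the observations are not independent. A comparison like this is more defensible on one record per patient, or with a model that accounts for repeated measures.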

Conclusion

In this project, we explored some of the demographic and other factors that affect the progression of cognitive decline for a broad cross section of seniors, as well as for seniors who were diagnosed at baseline with Alzheimer’s disease. We found that which factors are relevant depends on how you view the data. While time since diagnosis is always relevant, sex, marital status, the possession of one or two copies of the “Alzheimer’s Gene”, and ethnic/racial factors may or may not be relevant in terms of cognitive decline, depending on whether you are viewing the set of patients broadly or just the ones diagnosed with Alzheimer’s disease.

We found from the outset that our initial measure of cognition, the ECog test, was missing frequently enough (especially from 24 months after baseline onward) that it was not usable. We found a proxy in the CDR test; it was not a complete proxy, but it was more widely available. After our data exploration, we tried (and failed) to construct a useful predictive model based on it. However, at the end of our work we did stumble across one measure that provides some evidence that a patient’s attitude toward life can make a difference in how rapidly Alzheimer’s disease causes cognitive decline.